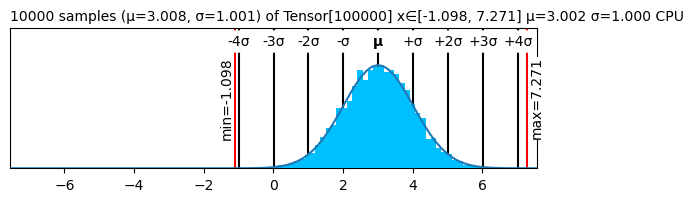

Tensor.manual_seed(42)

t = Tensor.randn(100000)+3

plot(t)

📊 View as a histogram

plot

plot (x:tinygrad.tensor.Tensor, center:str='zero', max_s:int=10000, plt0:Any=True, ax:Optional[matplotlib.axes._axes.Axes]=None)

| | Type | Default | Details |
|---|---|---|---|
| x | Tensor | | Tensor to explore |
| center | str | zero | Center plot on zero, mean, or range |
| max_s | int | 10000 | Draw up to this many samples. =0 to draw all |
| plt0 | Any | True | Take zero values into account |
| ax | Optional | None | Optionally provide a matplotlib axes. |
| **Returns** | **PlotProxy** | | |
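To make the `center`, `max_s`, and `plt0` options more concrete, here is a minimal pure-Python sketch of one plausible reading of the table above. It is not the library's implementation: the helper names `sketch_x_limits`, `sketch_subsample`, and `sketch_drop_zeros` are hypothetical, and the exact rules `plot` uses to pick axis limits or draw samples are assumptions.

```python
import random
from statistics import fmean


def sketch_x_limits(values, center="zero"):
    """Hypothetical helper: pick histogram x-axis limits for a `center` mode."""
    lo, hi = min(values), max(values)
    if center == "range":
        # Assumption: show exactly the span of the data.
        return lo, hi
    if center == "mean":
        # Assumption: symmetric window around the mean that covers all data.
        m = fmean(values)
        half = max(m - lo, hi - m)
        return m - half, m + half
    if center == "zero":
        # Assumption: symmetric window around zero that covers all data.
        half = max(abs(lo), abs(hi))
        return -half, half
    raise ValueError(f"unknown center mode: {center!r}")


def sketch_subsample(values, max_s=10000, seed=0):
    """Hypothetical helper: keep at most `max_s` values; `max_s=0` keeps all."""
    values = list(values)
    if max_s == 0 or len(values) <= max_s:
        return values
    # Random sampling without replacement; the real sampling scheme may differ.
    return random.Random(seed).sample(values, max_s)


def sketch_drop_zeros(values, plt0=True):
    """Hypothetical helper: with `plt0=False`, ignore exact zeros."""
    return list(values) if plt0 else [v for v in values if v != 0]


data = [1.0, 2.0, 6.0]
for mode in ("zero", "mean", "range"):
    print(mode, sketch_x_limits(data, center=mode))
```

Under these assumptions, `plt0=False` matters most for sparse data such as the output of `relu()`, where a large spike at zero would otherwise dominate the histogram.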

plot(t, center="range")

plot(t, center="mean")

plot((t-3).relu())

plot((t-3).relu(), plt0=False)

fig, ax = plt.subplots(figsize=(6, 2))

fig.tight_layout()

plot(t, ax=ax);
